Study Makes Disturbing Discovery About The Prevalence Of Korean Actresses And Singers In Deepfake Content

Content Warning

As AI becomes a more prevalent part of our society, the danger of deepfake media, described as “a video of a person in which their face or body has been digitally altered so that they appear to be someone else, typically used maliciously or to spread false information,” has become increasingly worrying.

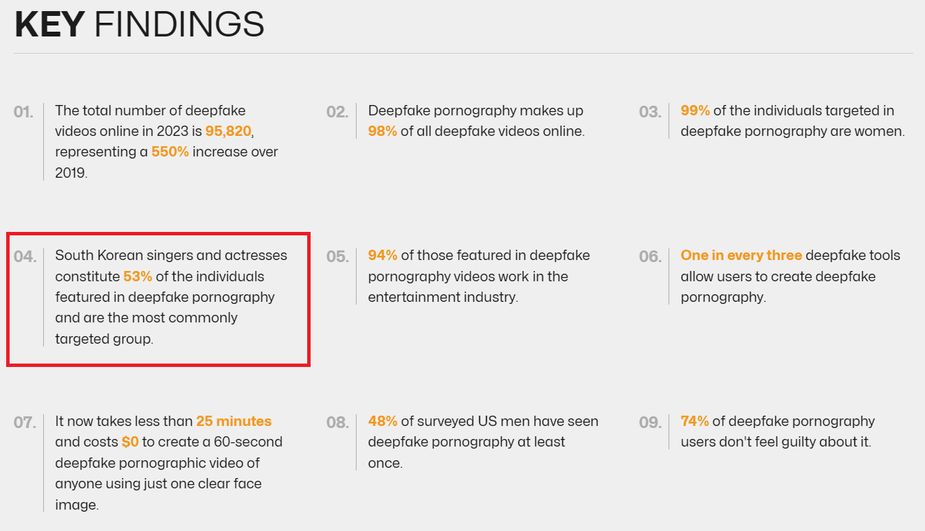

While there are non-malicious ways to use deepfake technology, the overwhelming majority of content created this way is pornographic in nature — in fact, a whopping 98% of deepfake videos are pornography, according to a 2023 report by Home Security Heroes. Also according to this report, there were a total of 95,820 deepfake videos online in 2023, which is a 550% increase over 2019. And of the 98% of these videos that were pornographic in nature, 99% targeted women.

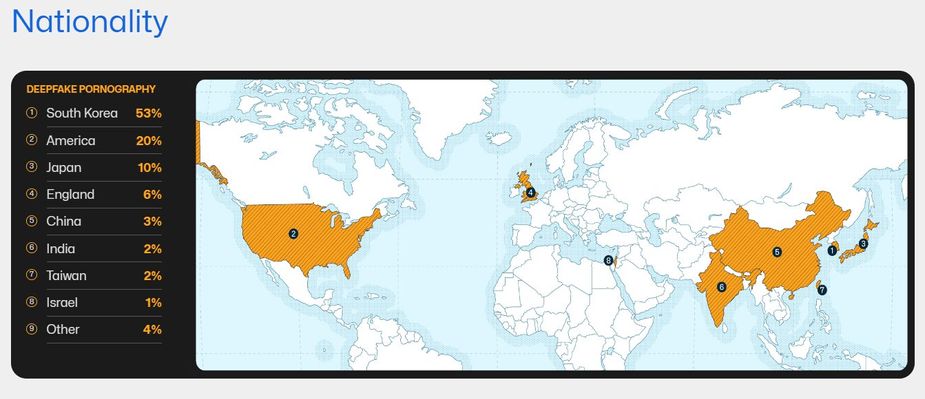

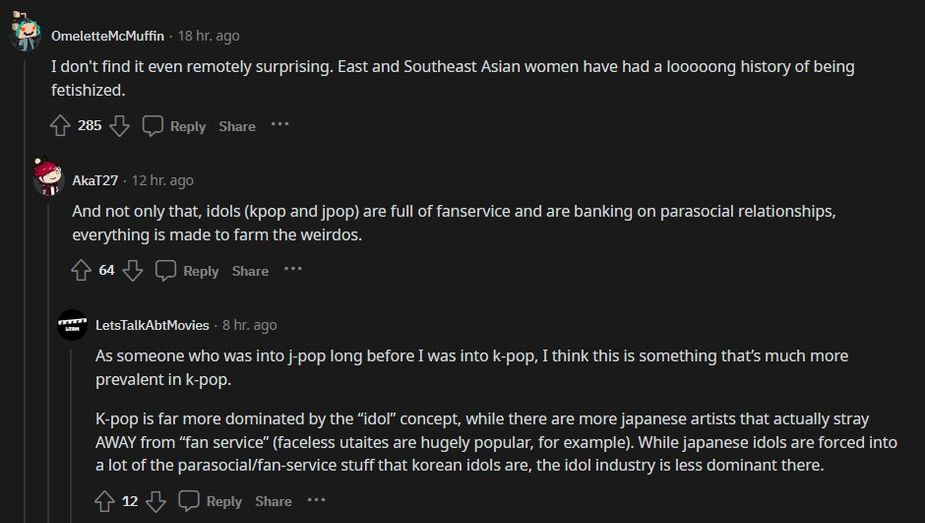

And the demographics are particularly worrying for South Korean women, especially those in the entertainment industry. South Korean actresses and singers make up an astounding 53% of individuals targeted in deepfake pornography, making them the most-targeted group by nationality and gender.

Here is a look at the key findings in the 2023 report.

The second-most targeted nationality, according to the study, is American at 20%, while Japanese is third at 10%. That means South Korean women are targeted more than twice as often as the next most at-risk group.

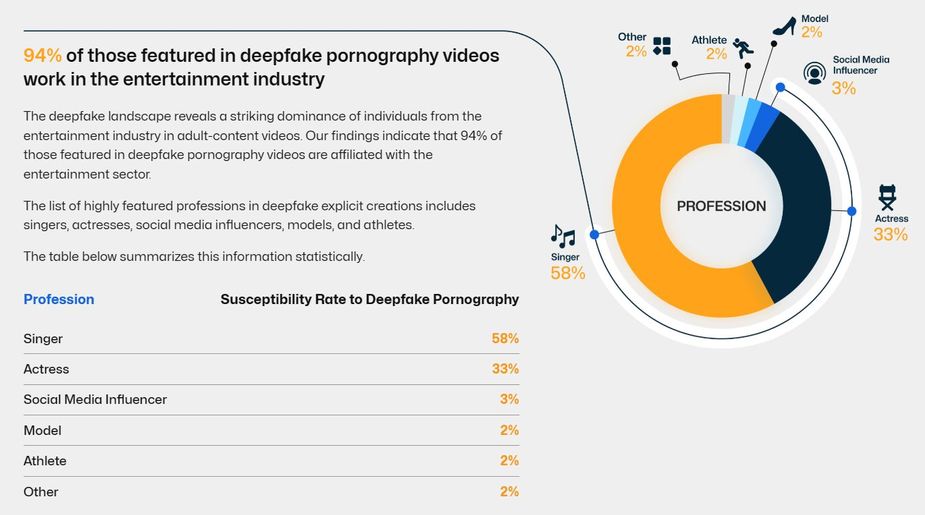

And of the people featured in deepfake pornography — not just South Koreans, but individuals in general — 94% work in the entertainment industry as singers, actresses, or social media influencers.

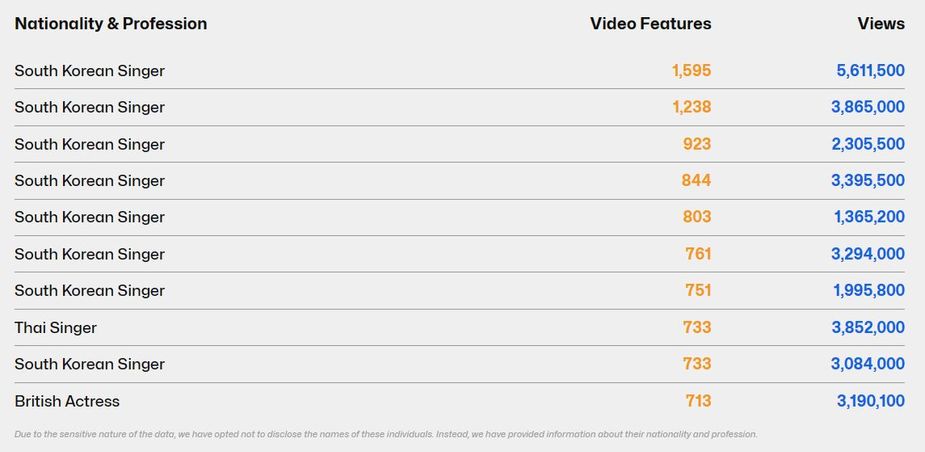

The report also listed the ten individuals who are most often targets of deepfake pornography, and while their identities were not disclosed due to the data’s sensitive nature, their nationalities and professions were included. Of these ten women, eight were South Korean singers.

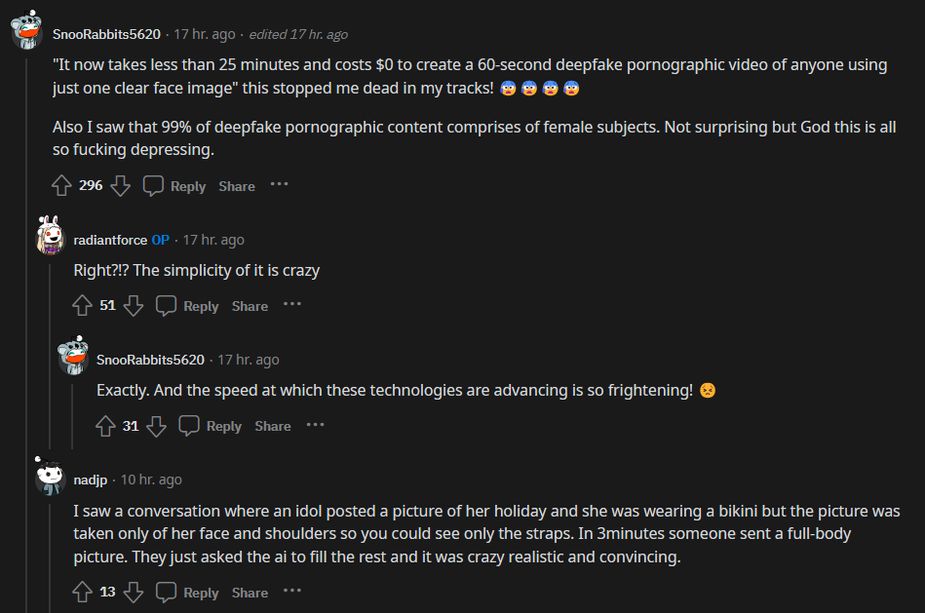

The study lists several other shocking and disturbing revelations, such as the fact that it can take less than 25 minutes and cost nothing to create a minute-long deepfake pornographic video from just a single, clear face image. The top ten dedicated deepfake porn sites drew a combined total of over 303 million video views in 2023, showing just how prevalent this kind of media consumption has become despite the moral, ethical, and legal complications surrounding it.

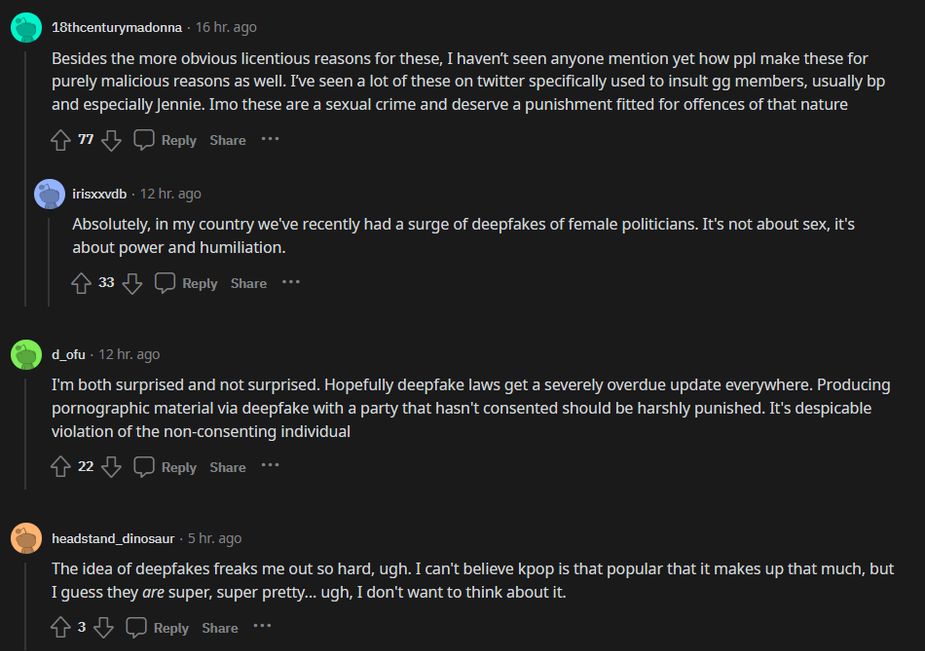

Home Security Heroes’ article was recently shared on the K-Pop subreddit, since so much of the study concerned South Korean entertainers. Here’s how people reacted to the disturbing news.

As the study concludes:

These findings emphasize the multifaceted nature of deepfake technology and its impact on various domains. As a result, there is a pressing need for responsible usage, ethical considerations, and regulatory safeguards to address the evolving challenges that deepfake content presents.

— Home Security Heroes